TUBSRobustCheck - assessing the robustness of semantic segmentation neural networks

A (left) front-car image overlaid with a semantic segmentation prediction of a deep neural network.

Autonomous vehicles need to understand the world around them in order to navigate safely in our complex and highly dynamic city environments. Towards that objective, diverse deep learning approaches have been rather successful. However, recent studies have shown that neural networks can be easily fooled by carefully crafted modifications of the input image, raising serious concerns about the actual robustness of such systems.

During the last two years, I have worked as a research assistant under the supervision of Andreas Bär at the Signal Processing and Machine Learning Group of the Technische Universität Braunschweig. During this time, I worked on diverse topics around the robustness of neural networks. Today, I am very happy to share one of the main results of my work, the TUBSRobustCheck toolbox! TUBSRobustCheck is a PyTorch-based, architecture-agnostic toolbox developed to enable researchers to assess the robustness of their semantic segmentation neural networks against diverse attacks and corruptions.

Our proposed toolbox is divided into two blocks: attacks, which contains various individual and universal adversarial attacks, and corruptions, which implements common image corruptions, as first introduced by Hendrycks et al., 2019.

Let's now dive into some capabilities of the TUBSRobustCheck toolbox!

The TUBSRobustCheck Attack toolbox

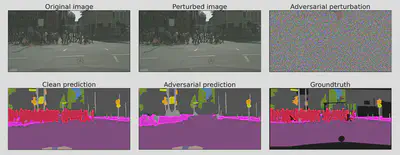

Among others, the proposed toolbox implements the Fast Gradient Sign Method (FGSM) (and its iterative and momentum variants), Generalizable Data-free Universal Adversarial Perturbations (GD-UAP), and the Metzen single image and universal attacks. Below, I show examples that were produced with our toolbox using a SwiftNet as a semantic segmentation neural network.
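As a quick aside on how the simplest of these attacks works, here is a minimal sketch of a single FGSM step. It does not use the TUBSRobustCheck API. To stay dependency-free it attacks a hypothetical toy logistic classifier whose input gradient can be written by hand, whereas in practice the gradient would come from PyTorch autograd on the segmentation network. All names (`fgsm_step`, `weights`, `epsilon`) and the assumption that inputs lie in [0, 1] are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, weights, bias):
    """Probability of class 1 for a toy logistic classifier (illustrative model)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def loss_gradient_wrt_input(x, y, weights, bias):
    """Gradient of the binary cross-entropy loss w.r.t. the input: (p - y) * w."""
    p = predict(x, weights, bias)
    return [(p - y) * w for w in weights]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_step(x, y, weights, bias, epsilon):
    """One FGSM step: move every input value by epsilon along the gradient sign."""
    grad = loss_gradient_wrt_input(x, y, weights, bias)
    # Step in the direction that increases the loss, then clip to the
    # assumed valid input range [0, 1].
    return [min(1.0, max(0.0, xi + epsilon * sign(g))) for xi, g in zip(x, grad)]


# Hypothetical toy data: three "pixels", true label 1.
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.6, 0.2, 0.5]
y = 1

x_adv = fgsm_step(x, y, weights, bias, epsilon=0.1)
print(predict(x, weights, bias), predict(x_adv, weights, bias))
```

In this toy setting the confidence in the correct class drops from about 0.75 to about 0.62 after one step, although no input value changed by more than `epsilon`. The iterative and momentum variants repeat this step several times with a smaller step size.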

The top left image shows the original image that a neural network generally receives as input. Without any perturbation, a state-of-the-art semantic segmentation neural network will produce a segmentation prediction that resembles the clean prediction in the bottom row, left. Note that this prediction is very similar to the actual ground truth depicted in the bottom row, right.

By applying an attack to the input, in this case the Metzen single-image attack with a static target, an adversarial perturbation is computed (top row, right). This adversarial perturbation can be added to the original input image to form an adversarial example (top row, middle), which still looks like a clean image to humans. However, when we feed the perturbed image to the semantic segmentation network, we observe that the network predicts a scene with entirely different semantics, which exposes the vulnerability of the network.
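The step of adding a perturbation to an image can be sketched as follows. This is a minimal, dependency-free illustration and not the toolbox's implementation: the image is a nested list of pixel values in [0, 1], and the helper names `make_adversarial` and `linf_distance` as well as the budget `epsilon` are assumptions made for the example.

```python
def clamp(value, low, high):
    return max(low, min(high, value))


def make_adversarial(image, perturbation, epsilon):
    """Add a perturbation to an image under an L-infinity budget.

    The perturbation is first limited to [-epsilon, epsilon] per pixel, and the
    result is clipped to the assumed valid pixel range [0, 1].
    """
    adversarial = []
    for img_row, pert_row in zip(image, perturbation):
        row = []
        for pixel, delta in zip(img_row, pert_row):
            delta = clamp(delta, -epsilon, epsilon)
            row.append(clamp(pixel + delta, 0.0, 1.0))
        adversarial.append(row)
    return adversarial


def linf_distance(a, b):
    """Largest absolute per-pixel difference between two images."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


# Hypothetical 2x2 grayscale image and raw perturbation.
image = [[0.10, 0.50], [0.98, 0.30]]
perturbation = [[0.20, -0.01], [0.03, -0.50]]

adv = make_adversarial(image, perturbation, epsilon=0.04)
print(adv, linf_distance(adv, image))
```

Keeping the per-pixel change below a small `epsilon` is what makes the adversarial example look like a clean image to a human observer.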

This attack was purposefully designed to fool the neural network into predicting a static scene. In addition to that, our toolbox also includes other types of attacks, such as the dynamic Metzen attack:

Here, instead of fooling the network into producing a pre-defined (static) output, we fool it into misclassifying a chosen semantic class of the input image. In this example, we computed a targeted adversarial perturbation that makes the neural network wrongly classify the pixels of pedestrians. (Stop here for a moment and imagine how dangerous it would be to have a system failing or being attacked in this way!)
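To give an idea of what such a target could look like, the sketch below builds a target label map in which every pixel of the class to hide is replaced by the label of its nearest pixel from another class, while all other pixels keep their predicted label. This nearest-neighbor filling is my assumption for illustration and is not taken from the toolbox's code. The function name `build_dynamic_target` and the class indices are hypothetical, and the brute-force search is only suitable for tiny examples.

```python
def build_dynamic_target(prediction, hidden_class):
    """Return a copy of `prediction` in which `hidden_class` pixels are
    overwritten with the label of the nearest pixel of any other class."""
    height, width = len(prediction), len(prediction[0])
    keep = [(r, c) for r in range(height) for c in range(width)
            if prediction[r][c] != hidden_class]
    if not keep:
        raise ValueError("every pixel belongs to the hidden class")

    target = [row[:] for row in prediction]
    for r in range(height):
        for c in range(width):
            if prediction[r][c] == hidden_class:
                # Brute-force nearest neighbor by squared Euclidean distance.
                nr, nc = min(keep, key=lambda p: (p[0] - r) ** 2 + (p[1] - c) ** 2)
                target[r][c] = prediction[nr][nc]
    return target


# Hypothetical class indices and a tiny 3x4 clean prediction.
ROAD, SIDEWALK, PERSON = 0, 1, 2
prediction = [
    [ROAD, ROAD,   SIDEWALK, SIDEWALK],
    [ROAD, PERSON, PERSON,   SIDEWALK],
    [ROAD, PERSON, PERSON,   SIDEWALK],
]

target = build_dynamic_target(prediction, hidden_class=PERSON)
for row in target:
    print(row)
```

An attack would then optimize the perturbation so that the network's output matches `target`, which removes the pedestrians from the prediction while leaving the rest of the scene plausible.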

The TUBSRobustCheck Corruption toolbox

Apart from adversarial attacks, our toolbox also implements diverse corruptions. For that, we followed the work of Hendrycks et al., 2019 and implemented corruptions of four classes: Noise (Gaussian, shot, impulse, and speckle), Blur (defocus, glass, motion, zoom, and Gaussian), Weather (snow, frost, fog, brightness, and spatter), and Digital (contrast, elastic transform, pixelate, JPEG compression, and saturate). With these classes, we hope to cover some of the most common types of image corruption.

To illustrate the capabilities of our corruption toolbox, below we have some examples:

The idea is that we corrupt images by adding some artifact to them. In turn, these artifacts try to depict real-world scenarios. For instance, the frost weather corruption tries to depict the case where there is frost covering the camera lens. Similarly, the motion blur tries to depict the case where some motion affects the image capture process, etc.
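As a rough illustration of one of the noise corruptions, the sketch below adds Gaussian noise to an image at a chosen severity level. It is not the toolbox's implementation: the function name `gaussian_noise`, the five-level severity scale (borrowed from the convention of the Hendrycks benchmark), and in particular the standard deviation values are assumptions picked for the example.

```python
import random

# Illustrative noise standard deviations per severity level (assumed values).
SIGMA_BY_SEVERITY = {1: 0.04, 2: 0.08, 3: 0.12, 4: 0.16, 5: 0.20}


def gaussian_noise(image, severity=1, seed=0):
    """Add zero-mean Gaussian noise to an image with pixel values in [0, 1]."""
    rng = random.Random(seed)  # fixed seed so the corruption is reproducible
    sigma = SIGMA_BY_SEVERITY[severity]
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
            for row in image]


def mean_abs_error(a, b):
    """Average absolute per-pixel difference between two images."""
    diffs = [abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# Hypothetical 8x8 uniform gray image.
image = [[0.5] * 8 for _ in range(8)]
mild = gaussian_noise(image, severity=1)
strong = gaussian_noise(image, severity=5)

print(mean_abs_error(mild, image), mean_abs_error(strong, image))
```

Evaluating a network on the same images at increasing severity gives a simple picture of how quickly its predictions degrade.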

Final remarks

TUBSRobustCheck is a PyTorch-based, architecture-agnostic toolbox that tries to address diverse challenging scenarios that autonomous systems relying on semantic segmentation may face in the wild. It contains the most common benchmarks to evaluate the robustness of semantic segmentation neural networks against diverse attacks and corruptions.

I hope this project presentation has shown you the importance of investigating the robustness of semantic segmentation neural networks. Please send me a message if you have any questions, and have a good experience using TUBSRobustCheck!

Feel free to share our toolbox using the links below 😃