Deep Domain Adaptation - Taxonomy and New Methods

An overview of my master’s thesis project

In this post, I would like to talk a bit about the work I developed under the supervision of Dr. Vladimir Golkov during my master’s thesis at the Computer Vision & Artificial Intelligence Group of the Technical University of Munich.

To begin with, I think it is worth explaining how I ended up writing my master’s thesis at TU Munich, given that I was enrolled at TU Braunschweig.

When I was looking for a master’s thesis topic, I could not find anything at TU Braunschweig that caught my attention. So I decided to look elsewhere and, after a few days and emails, I was fortunate to get to know Dr. Golkov from TU Munich. Since I wanted to learn what happens behind the scenes in deep learning, I asked Vladimir about potential topics before agreeing to write my master’s thesis at TU Munich. He offered me a range of topics, but one of them really stood out: understanding deep learning. It was an instant match, as I was looking precisely for a way to comprehensively understand deep learning and its concepts.

After a year of reading hundreds of papers, having tons of fruitful discussions, and constantly coding, I successfully presented my master’s thesis at TU Braunschweig. Although the work is still ongoing, below I present an overview of it for those who might be interested. An article on arXiv will also appear soon 😄.

Data Efficiency in Deep Learning

Deep learning methods rely on many strategies to achieve data efficiency. One of the most common is to endow models with prior knowledge about the underlying problem by using neural network layers with purposefully tailored properties. This approach lies at the heart of deep learning, yet machine learning practitioners quite often overlook it.

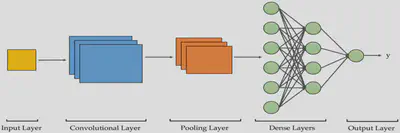

Let’s take a look at the prominent example of convolutional neural networks (CNNs).

CNNs are built from convolutional and pooling layers, which endow them with translation equivariance and scale separation (not to mention the deep natural image initialization prior, but that’s worth another post).
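To make “translation equivariance” concrete, here is a minimal NumPy sketch. It checks that shifting the input and then convolving gives the same result as convolving and then shifting, and that a generic dense layer does not have this property. The helper names `circular_conv1d` and `shift`, the circular padding, and the kernel values are illustrative assumptions, not code from the thesis.

```python
import numpy as np

def circular_conv1d(x, k):
    """1D cross-correlation (what deep learning frameworks call 'convolution').

    Circular (wrap-around) padding is an assumption made here so that
    equivariance holds exactly, even at the borders.
    """
    n, r = len(x), len(k) // 2
    out = np.zeros(n)
    for i in range(n):
        for j, w in enumerate(k):
            out[i] += w * x[(i + j - r) % n]
    return out

def shift(x, s):
    """Translate a signal by s positions, wrapping around the ends."""
    return np.roll(x, s)

rng = np.random.default_rng(0)
x = rng.normal(size=16)          # hypothetical input signal
k = np.array([1.0, -2.0, 1.0])   # hypothetical shared kernel (discrete 2nd derivative)

# Convolution: shift-then-convolve equals convolve-then-shift.
print(np.allclose(circular_conv1d(shift(x, 3), k), shift(circular_conv1d(x, k), 3)))  # True

# A random dense (fully connected) layer does not commute with shifts.
W = rng.normal(size=(16, 16))    # hypothetical dense weight matrix
print(np.allclose(W @ shift(x, 3), shift(W @ x, 3)))  # False
```

The property comes from weight sharing: the same kernel is applied at every position. The dense layer has a separate weight for every input/output pair, so nothing ties its behaviour at one position to its behaviour at another.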

These properties are essentially what make CNNs shine, as they substantially reduce the number of parameters, the associated computational cost, and the amount of data needed for training. Interestingly (and perhaps unnoticed by many), certain architectural design and training strategies at a more macroscopic level are also known to greatly benefit neural networks’ performance. These strategies are very diverse, ranging from a simple cluster assumption to more complex concepts such as adversarial learning. However, there is one interesting difference: there is evidence that these macroscopic strategies are more abstract, in the sense that they transfer across different data types and tasks. The problem is that there are neither guidelines on when such strategies are beneficial nor clear explanations of how they help solve problems in deep learning approaches.
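To put a rough number on the parameter savings, here is a back-of-the-envelope comparison. The setup is hypothetical and chosen only for illustration: a 32x32 RGB input mapped to 16 output channels at the same 32x32 resolution (stride 1, “same” padding), once with a fully connected layer and once with a 3x3 convolution. Both layers include biases. The helper functions are illustrative and not part of any library.

```python
def dense_params(in_features, out_features, bias=True):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)

def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Weights plus biases of a 2D convolution; independent of image size."""
    return in_channels * out_channels * kernel_size ** 2 + (out_channels if bias else 0)

# Hypothetical sizes: 32x32 RGB input -> 32x32 feature map with 16 channels.
h, w, c_in, c_out = 32, 32, 3, 16

fc = dense_params(h * w * c_in, h * w * c_out)  # every pixel connected to every output
conv = conv2d_params(c_in, c_out, kernel_size=3)  # one small kernel shared across positions

print(fc)    # 50348032
print(conv)  # 448
```

The convolution’s count does not depend on the image size at all, whereas the dense layer’s count grows with the product of input and output sizes. This is the weight sharing that the translation equivariance prior buys.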

Deep Domain Adaptation: Taxonomy and New Methods

In my master’s thesis, I researched design strategies (patterns), trying to understand the context in which they are employed and the problems they try to solve. In other words, I investigated task- and data-agnostic architectural design patterns for recurring problems in deep learning.

For the analysis, I used an existing “visual language” of the Computer Vision & Artificial Intelligence Group. The idea behind this visual language is to abstract away the topological details of neural networks, such as the type and number of layers, and thereby provide a common language for analyzing deep learning methods. By bringing diverse methods under the same umbrella, I could identify more than 20 design patterns employed throughout deep learning. Furthermore, to understand the machine learning scenario in which each design pattern is commonly employed, I proposed an ontology of machine learning tasks. Combining the catalog of design patterns with the ontology of machine learning tasks made it possible to derive a methodology for designing novel deep learning approaches in a more structured way.

As a proof of concept for the proposed methodology, I extended an existing unsupervised (self-supervised contrastive) deep domain adaptation approach by injecting design patterns into it. Interestingly, the changes achieved state-of-the-art performance on the popular and challenging VisDA-2017 domain adaptation benchmark. This provides empirical evidence that design patterns are transferable across distinct machine learning tasks and data types.

I am still working on this topic and running additional experiments. Nonetheless, I am confident that the proposed methodology will be the starting point for a more structured analysis of deep learning approaches and that it will provide guidelines for designing novel ones.

A paper about it is expected to be released soon, so stay tuned!